The beginning of the end of World War II occurred 80 years ago Thursday, when roughly 160,000 Allied troops made landfall in Normandy on D-day. The initial battle against some 50,000 armed Germans resulted in thousands of American, British and Canadian casualties, many with grave injuries.

Who would care for them?

By June 6, 1944, the United States medical establishment had spent years preparing to treat these initial patients — and the legions of wounded warriors that were sure to follow.

The curriculum for medical schools was accelerated. Internship and residency training was compressed. Hundreds of thousands of women were enticed to enroll in nursing schools tuition-free.

Conscientious objectors — and others — were trained to serve as combat medics, becoming the first link in a newly developed “chain of evacuation” designed to get patients off the front lines and into hospitals with unprecedented efficiency. Medics capitalized on tools like penicillin, blood transfusions and airplanes outfitted as flying ambulances that hadn’t existed during World War I.

“The nature of warfare was very, very different in 1944,” said Dr. Leo A. Gordon, an affiliate faculty member in the Cedars-Sinai History of Medicine Program. “Therefore, the nature of medicine was very, very different.”

Gordon spoke with The Times about an aspect of World War II that’s often overlooked.

How did you become interested in the medical aspects of World War II?

In my surgical training, I spent a lot of time in a [Veterans Affairs] hospital in Boston. That was probably the start of what has really become a career-long interest in World War II in general and the medical aspects of how America prepared for the invasion.

As veterans have aged and the memory of June 6, 1944, has lost some of its impact, that has only stimulated me to keep up that particular interest.

How did the U.S. gear up to handle the medical aspects of the war?

After the Pearl Harbor attack on Dec. 7, 1941, it was clear to the medical establishment that we were going to need more doctors, more nurses and more front-line combat medics.

The U.S. surgeon general established a division to speed up the medical educational process. The 247 medical schools that existed at that time all had accelerated graduation programs that shrank a year [of instruction] down to nine months. In addition, the Assn. of American Medical Colleges shrank the internship year to nine months, and all residencies were abbreviated to two years maximum, no matter what the specialty was.

When you were done with your training, there was the 50-50 program — 50% would be drafted and 50% would be returned to the community.

Since most of the injuries were going to be traumatic injuries, the American College of Surgeons took a very active role. They established a national roadshow and showed doctors how to deal with the injuries with which they were going to be confronted: fractures, burns and resuscitations.

How did they know what kinds of war wounds to prepare for?

They prepared for dealing with similar trauma to what they had seen in their practices, but on a larger scale. There were also other developments that were going to aid them in their ability to take care of wounded soldiers.

What kind of developments?

Number one was the availability of penicillin. Infection after wounds was a terrible problem in World War I and early in World War II until penicillin became widely available in 1943.

The problem was you had 200,000 men between 18 and 45 (many of whom were 16 and lied about their age to get into the military) who were headed to Europe to liberate the women of Europe. So venereal disease became a widespread and debilitating problem for the Army, the Navy and the Air Force. The dilemma for penicillin was, do you bring it to the battlefield or do you bring it to the bordello?

There was a large public relations poster effort throughout the country and on Army bases throughout Europe for preventing venereal disease because penicillin should go to wounded soldiers.

Were there other changes in the way injuries were treated?

In World War I, a guy gets shot and you put him on a stretcher, and it’s a long trek to the nearest hospital.

For World War II, the armed forces developed the chain of evacuation. It started with a combat medic. That fed into a system that went from a field hospital to a larger hospital to a general hospital and ultimately, if needed, to evacuation to England. It saved a lot of lives.

Were combat medics new in World War II?

The job existed before, but it became formalized. It was a very interesting nine-month tour of duty that covered military service, tactical training and, of course, the medical aspects of evaluating injuries, administering morphine, splinting and stopping bleeding. They had plasma transfusions available to treat shock.

A lot of them were conscientious objectors. They were in basic training next to people who were going to carry a rifle and kill people. There was friction between the two up until the time somebody got injured and started yelling, “Medic!”

What about nurses?

Frances Payne Bolton was a congresswoman from Ohio. She said essentially, “The doctors are going to do this and that. What about the nurses?” So she put through the Bolton Act of 1943, which created the U.S. Cadet Nurse Corps. It was essentially a GI Bill for nurses. This was a focused, expedited program, free of charge.

Prior to Pearl Harbor, there were only about 19,000 Army nurses. By the end of the war, if you combine the European theater with the Pacific theater, there were hundreds of thousands of nurses.

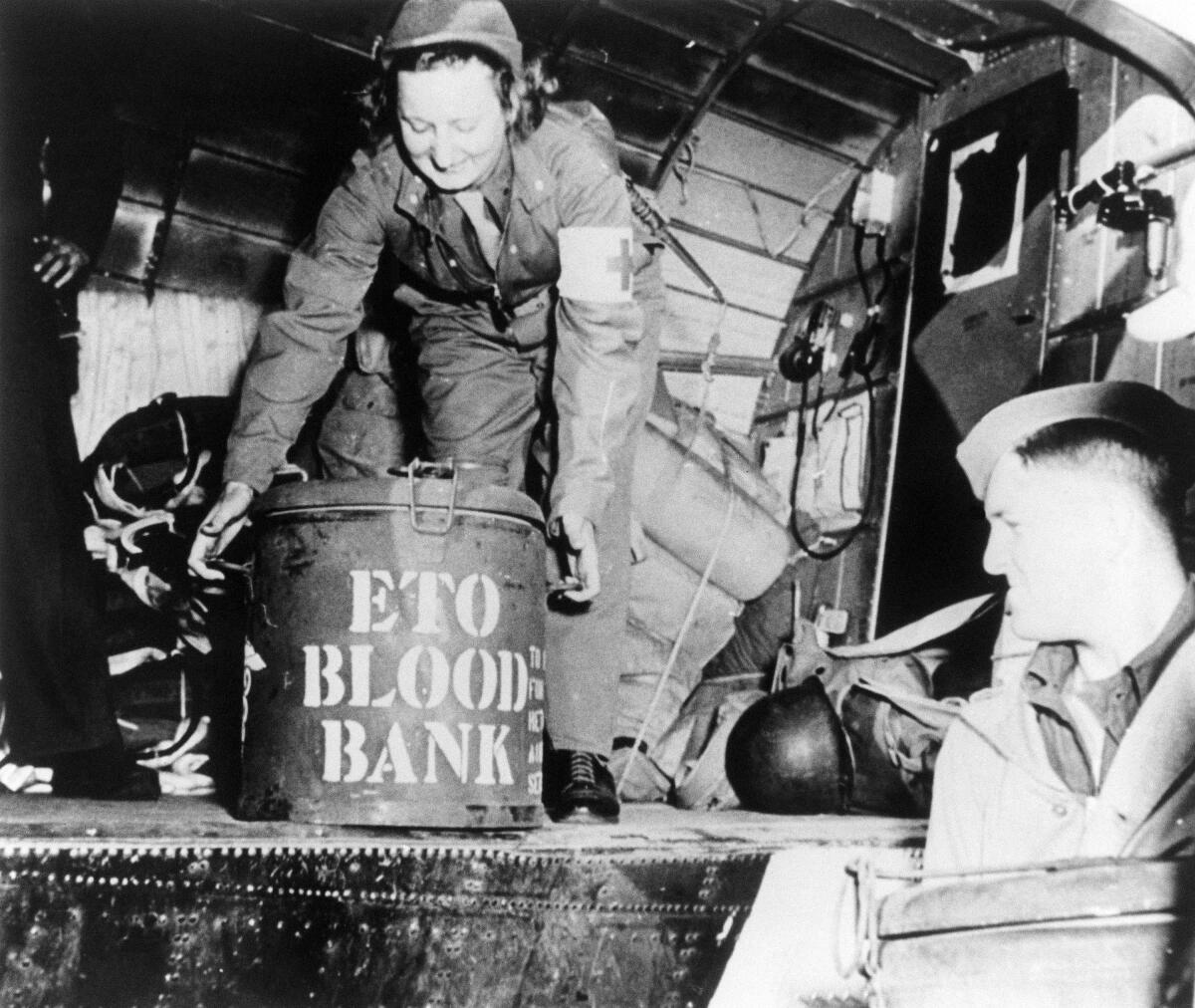

Lt. Stasia Pejko makes a last-minute check on blood bound for France on June 14, 1944.

(Associated Press)

What about other new roles?

This was the first time in warfare that air evacuation was used to a large degree. That gave rise to the creation of the flight nurse, who had to be aware of many things other than caring for a patient on the ground. They had to learn crash survival. They had to learn how to deal with the effects of high altitude.

Did any of these innovations in medical care return to the States after the war?

The overarching theme in the history of military medicine is that once a war ended, there was very little interest in using that event for medical progress, except for World War II.

The advances that came out of World War II start with penicillin. Number two was the management of chest injuries, abdominal injuries and vascular injuries.

Number three was advances in the use of plasma and blood banking, particularly through the work of Dr. Charles Drew, which is a story in and of itself. His contributions saved innumerable lives.

Number four was the explosive growth of the Veterans Administration and the veterans hospitals. You had tens of thousands of people who served the country coming back home, and the VA system was going to have to take care of them.

Number five was the involvement of the government in medical research. Before World War II, it was unusual for the government to fund medical research.

And the sixth advancement was the increase in knowledge of the neuropsychiatric effects of war. It started off as shell shock, and then it evolved into battle fatigue. Later it morphed into PTSD.

Has the U.S. medical establishment accomplished anything of this magnitude since World War II?

I’m not an expert in military warfare, but now with drones and computers and special operations, I can hardly imagine so many people headed for a beach in hand-to-hand combat.

This interview has been edited for length and clarity.